Introduction to Operating Systems

An operating system (OS) is crucial software that manages computer hardware and software resources and provides common services for computer programs. Here’s a brief introduction to its main components and functions:

- Kernel: The core part of the OS that manages hardware, including the CPU, memory, and peripherals. It handles system calls, interrupts, and manages processes.

- Processes: The OS manages process creation, scheduling, and termination. It ensures that multiple processes can run concurrently without interfering with each other.

- Memory Management: The OS controls and allocates memory space to different programs and processes, ensuring efficient and secure use of the computer’s RAM.

- File System: It provides a way to store, retrieve, and organize files on storage devices. The file system manages file permissions, directory structures, and data organization.

- Device Drivers: These are specific types of software that allow the OS to communicate with hardware devices like printers, graphics cards, and network adapters.

- User Interface: The OS provides interfaces for users to interact with the system, which can be command-line interfaces (CLI) or graphical user interfaces (GUI).

- Security and Access Control: It manages user permissions and protects system resources from unauthorized access. This includes user authentication, file permissions, and network security.

- Networking: The OS provides networking capabilities to connect to other systems, manage network traffic, and ensure secure communication over networks.

- System Utilities: These are tools and programs provided by the OS to help with system maintenance, diagnostics, and configuration.

Popular operating systems include Microsoft Windows, macOS, Linux, and Unix. Each has its own features and strengths, tailored to different types of users and use cases.

Software can be broadly divided into two types, system software and application software, each with its own subcategories:

- System Software: This includes the operating system (e.g., Windows, macOS, Linux) and utilities that help manage hardware and software resources. It provides the foundational environment for running application software.

- Operating Systems: Manage hardware and software resources.

- Utilities: Tools for system maintenance, such as antivirus programs, disk cleanup tools, and backup software.

- Application Software: These are programs designed to perform specific tasks or applications for the user. They range from productivity tools to games.

- Productivity Software: Includes word processors (e.g., Microsoft Word), spreadsheets (e.g., Microsoft Excel), and presentation software (e.g., Microsoft PowerPoint).

- Media Software: Includes tools for editing photos, videos, and audio (e.g., Adobe Photoshop, Adobe Premiere Pro).

- Web Browsers: Programs like Google Chrome, Mozilla Firefox, and Safari for accessing the internet.

- Games: Interactive entertainment software (e.g., Fortnite, The Legend of Zelda).

Define Operating System

An operating system (OS) is a software layer that manages computer hardware and provides common services for application software. It acts as an intermediary between users and the computer hardware, facilitating communication and operation. Here’s a more detailed definition:

- Resource Management: The OS controls and allocates the computer’s hardware resources, including the CPU, memory, storage devices, and input/output devices. It ensures efficient and fair use of these resources among various applications and users.

- Process Management: It handles the creation, scheduling, and termination of processes (running programs). The OS ensures that multiple processes can run concurrently and manages the switching between them.

- Memory Management: The OS manages the computer’s memory, allocating space to processes and ensuring that they do not interfere with each other. It handles both physical memory (RAM) and virtual memory.

- File System Management: The OS provides a way to store, retrieve, and organize files on storage devices. It manages file permissions, directories, and the overall organization of data.

- User Interface: It offers interfaces for users to interact with the computer. This can be a command-line interface (CLI), where users type commands, or a graphical user interface (GUI), which provides windows, icons, and menus.

- Security and Access Control: The OS enforces security policies to protect data and system resources from unauthorized access. It manages user accounts, passwords, and permissions.

- Networking: The OS supports network operations, allowing computers to connect to and communicate over networks. It handles data transmission, network protocols, and network security.

Overall, the operating system is essential for the smooth operation of a computer system, providing the necessary environment for applications to run and ensuring efficient use of hardware resources.

Types of Operating Systems

An operating system performs basic tasks such as managing files, processes, and memory. It therefore acts as the resource manager for the whole machine and as the interface between the user's programs and the hardware, which makes it one of the most essential pieces of software on any device.

Single-User, Single-Task Operating System

- Description: Designed for one user to perform one task at a time.

- How It Works: The operating system handles only one application or process at a time, meaning you can’t run multiple applications simultaneously.

- Examples: Early personal computer systems and some simple embedded systems. For example, early versions of MS-DOS were single-tasking, where only one program could run at once.

Single-User, Multi-Tasking Operating System

- Description: Designed for one user to run multiple applications or tasks simultaneously.

- How It Works: The operating system manages several processes at the same time, switching between them to give the impression that all are running simultaneously.

- Examples: Modern personal operating systems like Windows, macOS, and Linux support multitasking, allowing users to run and switch between multiple applications at once. For instance, you can have a web browser open while running a word processor and listening to music simultaneously.

Batch Operating System

Definition:

A Batch Operating System processes jobs in groups (or “batches”) without direct interaction from the user. An operator collects jobs with similar requirements, groups them, and submits them for processing. The system then executes these jobs one after another efficiently.

Advantages:

- Shared Resources: Multiple users can submit jobs to the same system, improving resource utilization.

- Minimal Idle Time: The system operates with minimal idle time because jobs are processed in batches, reducing gaps between tasks.

- Efficient Management: Handling large volumes of similar jobs is straightforward as they are grouped and processed together.

Disadvantages:

- Debugging Challenges: Identifying and fixing errors can be difficult because debugging is done after the batch processing, not interactively.

- Cost: Initial setup and maintenance of batch systems can be expensive.

- Job Waiting Time: If one job fails, other jobs must wait until the issue is resolved, leading to unpredictable delays.

- Uncertain Processing Time: It’s hard to estimate how long each job will take while it’s in the queue, making scheduling and time management challenging.

Multiprogramming Operating System

Definition:

A Multiprogramming Operating System keeps multiple programs in the main memory at the same time. The OS manages the execution of these programs so that while one program is waiting for I/O operations (like reading from a disk), another program can use the CPU. This approach maximizes the use of system resources and improves overall system efficiency.

Advantages:

- Increased Throughput: By running multiple programs concurrently, the system can complete more tasks in a given time period, increasing overall productivity.

- Reduced Response Time: The system can switch between programs quickly, minimizing the time each program waits for CPU access and improving responsiveness.

- Efficient Resource Utilization: Better use of system resources, as the CPU is less likely to be idle while waiting for I/O operations.

Disadvantages:

- No User Interaction: Typically, there is no direct interaction between users and the system resources during execution, which can limit flexibility and control.

- Complexity in Management: Managing multiple programs and ensuring efficient resource allocation can be complex, requiring advanced scheduling algorithms and mechanisms.

- Resource Contention: Programs may compete for the same resources, potentially leading to inefficiencies or delays if not managed properly.

Multiprocessing Operating System

Definition:

A Multiprocessing Operating System uses more than one CPU (Central Processing Unit) to perform tasks simultaneously. By distributing processes across multiple processors, it enhances the system’s overall performance and efficiency.

Advantages:

- Increased Throughput: With multiple CPUs working in parallel, the system can handle more tasks at once, leading to improved performance and faster completion of processes.

- Fault Tolerance: If one CPU fails, the remaining CPUs can continue to operate, providing better reliability and system resilience.

Disadvantages:

- Complexity: Managing multiple CPUs adds complexity to the operating system. Synchronizing tasks and ensuring effective communication between CPUs can be challenging and difficult to understand.

- Cost: Systems with multiple processors can be more expensive due to the need for additional hardware and advanced software to manage the processors effectively.

Time-Sharing Operating System

Definition:

A Time-Sharing Operating System allows multiple tasks or users to share CPU time on a single system. Each task or user is allocated a small time slice, called a quantum, during which it can execute. When the quantum expires, the OS switches to the next task. Because the switching is fast, each user gets the impression of having the system to themselves. This approach is also known as multitasking.

Advantages:

- Equal Opportunity: Ensures that each task or user gets a fair share of CPU time, promoting fairness and efficiency.

- Reduced Software Duplication: Fewer chances of software duplication since multiple users can access the same system resources.

- Reduced CPU Idle Time: Minimizes idle time by continuously switching between tasks, keeping the CPU actively engaged.

- Resource Sharing: Allows multiple users to share hardware resources such as CPU, memory, and peripherals, reducing hardware costs and increasing efficiency.

- Improved Productivity: Users can work concurrently, reducing wait times and enhancing overall productivity.

- Enhanced User Experience: Provides an interactive environment where users can engage with the system in real time, offering a more dynamic experience compared to batch processing.

Disadvantages:

- Reliability Issues: The complexity of managing multiple tasks and users increases the likelihood of bugs and errors, which can lead to reliability problems.

- Security and Integrity Concerns: Sharing resources among multiple users heightens the risk of security breaches, so user access and data must be managed carefully.

- Data Communication Challenges: Managing data communication between tasks and users can be complex.

- High Overhead: Involves significant overhead due to scheduling, context switching, and resource management, which can impact system performance.

Distributed Operating System

Definition:

A Distributed Operating System manages a network of interconnected, autonomous computers that communicate with each other through a shared communication network. Each computer, or node, has its own CPU and memory, and they work together as a cohesive system. This setup, known as a loosely coupled system, allows users to access files and software across the network, even if those resources are not physically present on their local machine.

Advantages:

- Independent Operation: The failure of one system does not affect others in the network, maintaining overall system reliability.

- Increased Data Exchange Speed: Electronic mail and other communication tools within the network enhance data exchange rates.

- Enhanced Computation Speed: Resource sharing among systems speeds up computation and increases durability.

- Reduced Host Load: Distributes the computational load, reducing the burden on any single host computer.

- Scalability: Easily scalable by adding more systems to the network, accommodating growing needs.

- Reduced Data Processing Delay: Improves the speed of data processing by leveraging distributed resources.

Disadvantages:

- Network Dependency: A failure in the main network can disrupt communication across the entire system.

- Inconsistent Standards: The protocols and interfaces used to build distributed systems vary between implementations, which can lead to compatibility issues.

- Limited Availability: Distributed systems are complex and may not be readily available or easily implemented due to their advanced nature and requirements.

Real-Time Operating System (RTOS)

Definition:

A Real-Time Operating System (RTOS) is designed to process and respond to inputs within strict time constraints, or deadlines. The time the system takes to react to an input is called the response time. An RTOS ensures that tasks are completed within a predetermined timeframe, which is crucial for systems where timing is critical, such as missile guidance systems, air traffic control, and robotics.

Types of Real-Time Operating Systems:

- Hard Real-Time Systems: These systems have strict time constraints where even the slightest delay is unacceptable. They are used in life-critical applications like automatic parachutes or airbags, where immediate response is essential. Virtual memory is rarely used in these systems.

- Soft Real-Time Systems: These systems have less stringent time constraints. Delays are permissible to some extent, making them suitable for applications where timing is important but not critical to life or safety.

Advantages:

- Maximized Resource Utilization: Ensures optimal use of devices and system resources, leading to higher output.

- Fast Task Switching: Minimizes the time required to switch between tasks, with modern systems capable of switching tasks in microseconds.

- Application Focus: Prioritizes running tasks with less emphasis on queued applications, improving responsiveness for critical tasks.

- Embedded System Compatibility: Ideal for embedded systems with small program sizes, such as in transportation and other specialized areas.

- Error-Free Operation: Designed to operate with minimal errors, crucial for applications requiring high reliability.

- Efficient Memory Allocation: Manages memory allocation effectively to meet real-time requirements.

Disadvantages:

- Limited Task Concurrency: Supports fewer simultaneous tasks to ensure timely processing, potentially limiting functionality.

- High Resource Usage: Can be resource-intensive and expensive, sometimes requiring advanced hardware.

- Complex Algorithms: Requires complex algorithms for task scheduling and management, which can be challenging for developers.

- Specific Drivers and Interrupts: Needs specialized device drivers and interrupt handling to respond quickly to events.

- Thread Priority Management: Setting and managing thread priorities can be challenging, as the system is optimized for minimal task switching.

Example Applications of Real-Time Operating Systems:

- Scientific Experiments: Managing real-time data collection and analysis.

- Medical Imaging Systems: Ensuring timely processing of imaging data for diagnostics.

- Industrial Control Systems: Controlling machinery and processes in manufacturing.

- Weapon Systems: Handling critical timing in defense applications.

Concept of Buffering and Spooling

Buffering in Operating Systems

Definition: Buffering is a technique used in operating systems to manage data transfer between devices or between a device and an application. It involves temporarily storing data in a memory area called a buffer, which acts as an intermediary between the data source and the destination, allowing for more efficient data handling and reduced waiting times.

Concept:

- Purpose: To handle differences in speed between data-producing and data-consuming processes or devices, thereby improving overall system performance and efficiency.

- How It Works: Data is first written to a buffer before being transferred to its final destination. This allows the system to manage data transfers more effectively by reducing the time the CPU or application needs to wait for slower devices.

Advantages of Buffering:

- Reduced Waiting Time: Minimizes the time applications or processes need to wait to access data from slower storage devices by storing data temporarily in the buffer.

- Smooth I/O Operations: Facilitates smooth interaction between different devices connected to the system by acting as an intermediary storage area.

- Increased System Performance: Reduces the number of direct I/O operations needed, thereby improving the overall performance of the system.

- Efficient Data Access: Cuts down on the number of times the system needs to access the storage device, making data retrieval more efficient.

Disadvantages of Buffering:

- Memory Usage: Buffers consume additional memory, which can be significant, especially for long processes or large volumes of data.

- Unpredictable Data Size: The exact amount of data to be transferred may be unpredictable, leading to potential over-allocation of buffer space and wasted memory.

- Buffer Overflow: If more data is written to the buffer than it can handle, it can lead to buffer overflow, resulting in data loss or corruption.

Example of Buffering:

- Video Playback: When streaming a video, data is buffered ahead of playback to ensure smooth viewing. The video data is temporarily stored in a buffer so that playback can continue smoothly even if there are fluctuations in network speed or data delivery rates.

Summary: Buffering is a crucial technique in operating systems that enhances the efficiency of I/O operations by temporarily storing data in a buffer. This helps to manage differences in speed between various devices and processes, reduces waiting times, and improves overall system performance. However, it also comes with challenges such as increased memory usage and the potential for buffer overflow.

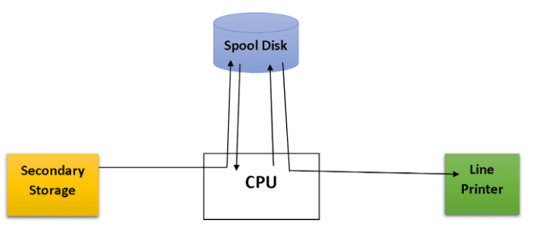

Spooling in Operating Systems

Definition:

Spooling (Simultaneous Peripheral Operations On-Line) is a technique used to manage and optimize I/O operations by temporarily storing data in a buffer, known as a spool, until it can be processed by a device or system. This helps in managing input and output operations efficiently, especially when dealing with devices that operate at different speeds.

How Spooling Works:

- Buffer Creation: A spool is created as a buffer to hold data temporarily. This buffer can be located in main memory or secondary storage (disk).

- Data Storage: When a process produces output for a slow device, the data is first stored in the spool rather than being sent directly to the device. This allows the faster producer to continue its work without waiting for the slower device to catch up.

- Sequential Processing: Data stored in the spool is processed sequentially as the slower device becomes available. This ensures that the CPU and other devices are not idle while waiting for I/O operations to complete.

- CPU and I/O Overlap: By storing data in the spool, the system allows the CPU to continue working on other tasks while I/O operations are handled in the background.

Example:

- Printing: When multiple documents are sent to a printer, they are first stored in a spool. The printer then processes these documents one by one from the spool, while other tasks and processes continue to use the CPU without interruption. This prevents the CPU from being idle while waiting for the printer to finish.

Advantages:

- Efficient Resource Utilization: Utilizes disk space as a large buffer, allowing I/O devices to operate at their full speed while the CPU continues to work on other tasks.

- Reduced Idle Time: Minimizes CPU idle time by overlapping I/O operations with CPU processing.

- Task Management: Manages multiple input and output tasks efficiently, preventing the CPU from becoming idle and improving overall system performance.

Disadvantages:

- Storage Requirements: Spooling requires significant storage space, especially when handling large volumes of requests and multiple input devices. This can lead to increased disk traffic and reduced performance if the disk becomes full.

- Increased Disk Traffic: High volume of data and active input devices can cause the secondary storage to become slower due to increased traffic, impacting the overall performance of the system.

Summary: Spooling is a crucial technique in modern operating systems that enhances the efficiency of I/O operations by using a buffer (spool) to manage and optimize data transfers between fast and slow devices. It allows for overlapping of CPU and I/O operations, improving system performance and reducing idle times. However, it also requires careful management of storage and disk traffic to avoid potential performance issues.

Operating System Components

An operating system (OS) is a complex software system that manages hardware resources and provides a range of services for computer programs. Here are the primary components of an operating system:

- Kernel:

- Definition: The core component of an OS that manages system resources and hardware. It operates in privileged mode and is responsible for low-level tasks.

- Functions:

- Process Management: Handles process scheduling, creation, and termination.

- Memory Management: Manages the allocation and deallocation of memory to processes.

- Device Management: Interfaces with hardware devices through device drivers.

- File System Management: Provides access to files and directories on storage devices.

- Process Management:

- Definition: The component responsible for managing the execution of processes.

- Functions:

- Process Scheduling: Determines the order in which processes are executed.

- Process Control: Manages process creation, synchronization, and communication.

- Context Switching: Saves and restores the state of processes during context switches.

- Memory Management:

- Definition: The component responsible for managing the system’s memory.

- Functions:

- Allocation and Deallocation: Assigns and frees memory for processes.

- Paging and Segmentation: Handles memory management techniques to efficiently use memory.

- Virtual Memory: Extends the apparent amount of memory by using disk space.

- File System:

- Definition: Manages files and directories on storage devices.

- Functions:

- File Operations: Provides mechanisms for creating, reading, writing, and deleting files.

- Directory Management: Organizes files into directories and subdirectories.

- Access Control: Manages permissions and access rights for files and directories.

- Device Drivers:

- Definition: Software modules that provide an interface between the OS and hardware devices.

- Functions:

- Hardware Communication: Facilitates communication between the OS and hardware devices.

- Device Control: Manages device operations and data transfer.

- User Interface (UI):

- Definition: The component that provides a way for users to interact with the OS.

- Types:

- Command-Line Interface (CLI): Allows users to interact with the OS through text-based commands.

- Graphical User Interface (GUI): Provides a visual interface with windows, icons, and menus for user interaction.

- System Libraries:

- Definition: Collections of pre-written code that applications can use to perform common tasks.

- Functions:

- Function Calls: Provides standard functions and routines for applications.

- API: Offers an Application Programming Interface for application development.

- System Utilities:

- Definition: Programs that perform maintenance and management tasks.

- Functions:

- File Management: Tools for managing files and directories (e.g., copy, move, delete).

- System Monitoring: Utilities for monitoring system performance and resources.

- Backup and Recovery: Tools for data backup and recovery.

- Security and Protection:

- Definition: Mechanisms to protect the OS and data from unauthorized access and threats.

- Functions:

- Authentication: Verifies user identities through passwords, biometrics, etc.

- Authorization: Manages user permissions and access rights.

- Encryption: Protects data by converting it into a secure format.

- Networking:

- Definition: Manages network connections and communication between systems.

- Functions:

- Network Protocols: Implements protocols for data exchange (e.g., TCP/IP).

- Network Services: Provides services such as file sharing, printing, and remote access.

Each component plays a critical role in ensuring that the operating system functions efficiently, providing a stable and user-friendly environment for running applications and managing hardware resources.

Operating System Services

Operating systems provide various services to manage hardware and software resources and facilitate user and application interactions. Here’s an overview of the primary services provided by an operating system:

- Process Management:

- Process Creation and Termination: Services to start and stop processes.

- Process Scheduling: Manages the execution order of processes to ensure efficient CPU utilization.

- Process Synchronization: Coordinates processes to prevent conflicts and ensure data consistency.

- Inter-Process Communication (IPC): Provides mechanisms for processes to communicate and synchronize with each other (e.g., message passing, shared memory).

- Memory Management:

- Memory Allocation: Allocates and deallocates memory to processes as needed.

- Virtual Memory: Extends physical memory by using disk space, allowing processes to use more memory than is physically available.

- Paging and Segmentation: Manages memory in units (pages or segments) to improve efficiency and isolation.

- File System Services:

- File Creation and Deletion: Services to create, delete, and manage files and directories.

- File Access: Provides mechanisms for reading, writing, and modifying files.

- Directory Management: Organizes files into directories and supports navigation and organization.

- File Protection: Manages access controls and permissions to secure files and directories.

- Device Management:

- Device Drivers: Interfaces with hardware devices to control their operation.

- Device Communication: Manages data transfer between devices and the OS.

- Device Allocation: Allocates devices to processes and ensures proper utilization.

- User Interface Services:

- Command-Line Interface (CLI): Provides a text-based interface for users to interact with the OS.

- Graphical User Interface (GUI): Offers a visual interface with windows, icons, and menus for user interactions.

- Input and Output Handling: Manages user input from devices (e.g., keyboard, mouse) and output to devices (e.g., monitor, printer).

- Networking Services:

- Network Communication: Facilitates data exchange between computers over a network.

- Network Protocols: Implements network protocols like TCP/IP for reliable communication.

- Network Services: Provides services such as file sharing, remote access, and internet connectivity.

- Security and Protection:

- Authentication: Verifies user identities to ensure authorized access.

- Authorization: Manages user permissions and access rights to system resources.

- Encryption: Protects data by converting it into a secure format to prevent unauthorized access.

- Auditing: Records and monitors system activities to detect and respond to security breaches.

- System Performance Monitoring:

- Resource Utilization: Monitors CPU, memory, disk, and network usage to ensure efficient performance.

- System Diagnostics: Provides tools for diagnosing and troubleshooting system issues.

- Performance Tuning: Adjusts system settings to optimize performance.

- System Maintenance:

- Backup and Recovery: Manages data backup processes and recovery from system failures or data loss.

- Software Updates: Facilitates the installation of OS updates and patches to improve functionality and security.

- Resource Management: Manages and allocates system resources efficiently to maintain system stability.

- Application Support:

- Application Programming Interface (API): Provides APIs for applications to interact with the OS and perform system-level operations.

- Library Services: Offers standard libraries and routines for application development and execution.

These services are essential for the effective operation of an operating system, providing the necessary infrastructure for managing hardware, executing applications, and ensuring security and efficiency.

System call

Definition of System Call

A system call is a request made by a user-level application to the operating system to perform a specific task or access hardware resources that the application does not have direct permission to manage. System calls provide a controlled interface for applications to interact with the OS kernel, allowing them to execute privileged operations such as file manipulation, process control, and communication.

Real-Life Example of a System Call

Example: Printing a Document

Imagine you want to print a document from a word processing application. Here’s how a system call would be involved in this process:

- Application Request:

- You open the word processing application and click the “Print” button to print your document.

- System Call Invocation:

- The application uses a system call to request the operating system to handle the print job. In this case, the call might be something like print() (an illustrative name), or a more specific call depending on the OS and its API.

- Context Switch to Kernel Mode:

- The operating system transitions from user mode to kernel mode to execute the request. This involves switching the CPU’s execution context to a privileged mode where the OS can directly interact with hardware.

- Executing the Request:

- The OS processes the print request by:

- Opening the Printer Device: The OS locates the printer device driver and prepares it for communication.

- Sending Data to the Printer: The document data is transferred from the application to the printer through the OS. This may involve buffering the data and managing the print queue.

- Return to User Mode:

- Once the print job is successfully sent to the printer, the OS switches back to user mode and the application resumes normal operation.

- Printer Output:

- The printer processes the data and prints the document.

Summary of Steps with System Calls:

- Application calls a system call (e.g., print()).

- Operating System switches to kernel mode.

- OS Kernel processes the request (interacts with the printer hardware).

- Printer outputs the document.

Example of a System Call:

In Unix-like systems, the write() system call allows a program to write data to a file or device. Here’s a simplified example in C:

    #include <unistd.h>

    int main(void) {
        const char *msg = "Hello, World!";
        write(1, msg, 13); // 1 is the file descriptor for stdout
        return 0;
    }

In this example:

- write() is a system call that writes the string "Hello, World!" to the standard output (stdout).

- The 1 parameter is the file descriptor for stdout.

- The 13 parameter is the number of bytes to write.

Summary:

System calls provide the essential interface between user programs and the operating system, enabling applications to perform operations like file handling, process management, and device interaction. They ensure that user programs can access necessary OS services while maintaining system security and stability.

Types of System Calls

System calls can be categorized into several types based on the functionality they provide. Here’s a breakdown of the main types of system calls:

1. Process Control

These system calls manage process creation, execution, and termination.

- fork(): Creates a new process by duplicating the calling process.

- exec(): Replaces the current process image with a new process image (in practice a family of calls, such as execve()).

- wait(): Waits for a child process to finish its execution.

- exit(): Terminates the calling process.

- getpid(): Retrieves the process ID of the calling process.

- kill(): Sends a signal to a process, for example to request its termination.

2. File Management

These system calls handle file creation, manipulation, and deletion.

- open(): Opens a file and returns a file descriptor.

- read(): Reads data from a file.

- write(): Writes data to a file.

- close(): Closes an open file descriptor.

- unlink(): Deletes a file.

- lseek(): Moves the file pointer to a specific location.

- chmod(): Changes file permissions.

3. Memory Management

These system calls manage memory allocation and deallocation.

- mmap(): Maps files or devices into memory.

- munmap(): Unmaps a region of memory.

- brk(): Changes the data segment size (used for dynamic memory allocation).

4. Device Management

These system calls interact with hardware devices.

- ioctl(): Controls hardware devices by sending device-specific commands.

- read(): Performs input operations on devices.

- write(): Performs output operations on devices.

5. Information Management

These system calls provide system information and manage system resources.

- getcwd(): Gets the current working directory.

- uname(): Retrieves system information.

- sysinfo(): Provides information about the system’s memory usage and other statistics.

6. Communication

These system calls facilitate communication between processes.

- pipe(): Creates a pipe for inter-process communication.

- socket(): Creates a network socket for communication.

- bind(): Binds a socket to a specific address and port.

- listen(): Listens for incoming connections on a socket.

- accept(): Accepts an incoming connection on a socket.

- connect(): Connects to a remote socket.

7. File System Management

These system calls manage file system operations.

- stat(): Retrieves information about a file (e.g., size, permissions).

- mkdir(): Creates a new directory.

- rmdir(): Removes a directory.

Summary of Types of System Calls:

- Process Control: Manage process creation, execution, and termination.

- File Management: Handle file operations like creation, reading, writing, and deletion.

- Memory Management: Manage memory allocation and deallocation.

- Device Management: Interface with hardware devices for input/output operations.

- Information Management: Provide and manage system information.

- Communication: Facilitate communication between processes or over networks.

- File System Management: Manage file and directory operations in the file system.

These system calls provide the essential functions required for managing processes, files, memory, devices, and communication within an operating system.

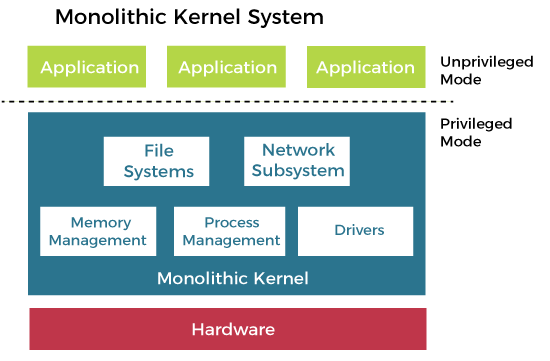

Monolithic Operating System

Definition: A monolithic operating system is one where the entire kernel, including core services such as device management, memory management, file management, and process management, runs as a single, large program in kernel space. All of these services share one address space, with no strict separation between the components of the kernel. User applications still run in user space and reach these services through system calls.

Structure of Monolithic Operating System

Characteristics of Monolithic Architecture

- Kernel Space:

- The kernel handles all operating system functions in a single address space. This includes device drivers, file systems, process scheduling, and memory management.

- Single Binary:

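- The kernel is typically built as a single, large binary image, with little or no enforced separation between its components. Some monolithic kernels, such as Linux, can load modules at run time, but those modules still execute inside the same kernel address space.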

- High Performance:

- Once a system call has entered the kernel, kernel components call one another directly as ordinary function calls, without the overhead of inter-process communication (IPC) or additional context switches between services. This can result in high performance for system operations.

- Simplicity in Design:

- The design is straightforward since all system services are integrated into one kernel. There is no need for complex IPC mechanisms to communicate between different system components.

Advantages of Monolithic Architecture

- Performance:

- Direct access to kernel services without the overhead of message passing or context switching can lead to better performance.

- Simplicity:

- The architecture is simple because all core functionalities are included in a single binary, making it easier to develop and maintain in a cohesive environment.

Disadvantages of Monolithic Architecture

- Security and Stability:

- Since all code runs in kernel space, any bugs or vulnerabilities in the kernel can potentially compromise the entire system. A fault in one part of the kernel can affect the stability and security of the whole OS.

- Lack of Modularity:

- It is difficult to update or replace individual components of the operating system without affecting the entire kernel. This can complicate maintenance and upgrades.

- Complexity of Debugging:

- Debugging issues can be challenging since a single bug in any part of the kernel can lead to system crashes or unpredictable behavior.

- Single Point of Failure:

- A crash in any kernel component can bring down the entire system, leading to a lack of robustness in handling faults.

Comparison with Microkernel Architecture

Microkernel:

- Design: The microkernel architecture separates the kernel into a minimal core (microkernel) that handles only essential functions such as IPC and low-level hardware interaction. Other services (e.g., file systems, device drivers) run in user space as separate processes.

- Advantages: Improved security and stability since faults in user-space services do not directly affect the kernel. More modular design allows for easier updates and maintenance.

- Disadvantages: Potential performance overhead due to context switching and IPC between user-space services and the microkernel.

Monolithic Kernel:

- Design: Combines all system services into a single large kernel. There is no strict separation between the services inside the kernel.

- Advantages: High performance and simplicity in design.

- Disadvantages: Lower security and stability due to the lack of modularity and potential for system-wide impact from kernel faults.

Historical Context

Monolithic kernels were common in early operating systems, including early versions of UNIX in the 1970s and MS-DOS in the 1980s. They were designed to maximize performance with the technology available at the time. Many modern operating systems add modularity, for example through loadable kernel modules or hybrid designs, to balance performance with security and maintainability.

Summary

The monolithic architecture is characterized by a single, large kernel that provides all system services. While it offers high performance and simplicity, it also poses challenges in terms of security, stability, and maintainability. Modern systems may employ microkernel or hybrid approaches to address some of these limitations while retaining high performance.

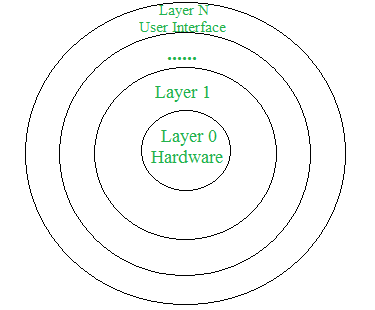

Layered Operating System Structure

Definition: A layered operating system structure organizes the operating system into multiple layers, each with a specific, well-defined function. The layers are arranged hierarchically, with each layer building upon the services provided by the layers below it. This design aims to improve upon less structured designs, such as monolithic or simple systems, by promoting modularity and abstraction.

Design Analysis:

- Layer Organization:

- Outermost Layer: The User Interface (UI) layer, which interacts with users and applications.

- Innermost Layer: The Hardware layer, which interacts directly with the computer’s hardware.

- Intermediate Layers: Various layers between the UI and hardware layers, each responsible for specific functions such as process management, memory management, and file systems.

- Access Rules:

- Each layer can access only the services provided by the layers below it.

- A layer cannot access the services of layers above it. For example, layer n-1 can access services from layers n-2 through 0 but not from layer n.

- To interact with hardware, the request travels through all intermediate layers from the UI layer down to the hardware layer.

Advantages:

- Modularity:

- Each layer is responsible for a specific set of tasks, promoting a modular design. This makes it easier to understand, develop, and maintain the system.

- Easy Debugging:

- Errors can be isolated to specific layers. For example, if an issue arises in the CPU scheduling layer, developers can focus solely on that layer without having to consider unrelated parts of the system.

- Easy Update:

- Changes or updates to one layer do not necessarily affect other layers. This allows for more flexible and manageable system maintenance.

- No Direct Access to Hardware:

- The hardware layer is isolated, preventing direct user access. This helps to protect the hardware from potential misuse or damage.

- Abstraction:

- Each layer only needs to interact with the layers below it and is abstracted from the details of higher layers. This simplifies the design and implementation of each layer.

Disadvantages:

- Complex Implementation:

- The layered approach requires careful planning to ensure that each layer is correctly positioned and interacts appropriately with other layers. Misplacement or incorrect design can lead to inefficiencies and errors.

- Slower Execution:

- Communication between layers involves traversing through intermediate layers. This can introduce overhead and increase response time compared to monolithic systems where all services are directly accessible.

- Increased Overhead:

- The additional layers can add overhead due to the need to pass requests and responses through multiple layers, potentially affecting system performance.

Example:

- Windows NT: Often cited as an example of a layered design. Its architecture includes the hardware abstraction layer (HAL), the kernel, and the executive services, all running in kernel mode, with subsystems and applications running above them in user mode.

Summary:

The layered operating system structure provides a modular, abstracted approach to system design, improving maintainability and ease of debugging. However, it introduces complexity and potential performance overhead due to the need to traverse multiple layers for inter-layer communication. This design approach balances the benefits of modularity and abstraction with the trade-offs in execution efficiency.